Introduction

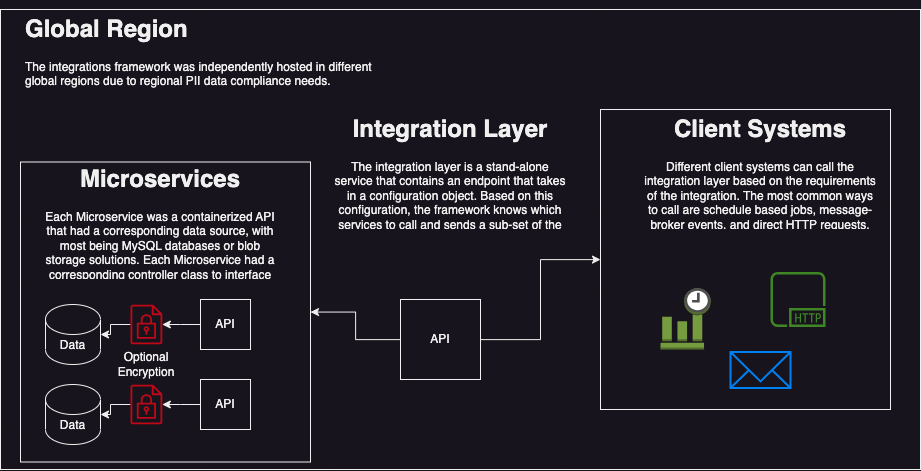

The popularity of microservice architecture has brought about a host of new opportunities and challenges. One of the significant challenges lies in creating an efficient system that enables seamless integration and communication between numerous microservices. This article aims to share my experience in designing and implementing a dynamic integration framework at a large organization, which facilitated the interaction of tens of internal microservices through a configuration approach.

The Challenge

The prime objective of the integration solution was to create a robust and flexible data access layer that connected an extensive network of both internal and external systems. With the data segregated across tens of independent microservices, each system’s requirements presented unique complexities.

A wide spectrum of needs emerged: certain systems necessitated access to large datasets spanning the full breadth of the microservices, while others demanded minimal data from just a few. Further complicating matters, data relations spanned multiple microservices, and the resulting dependencies meant that calls had to be made in a specific order so that data from one call could be joined into subsequent queries.

The complexity was further escalated by the diverse set of requirements for data delivery. Each integrating system had its own unique needs in terms of cadence, format, and triggering method - be it scheduled jobs, event-driven triggers, or direct HTTP calls.

Additionally, security was paramount. Certain microservices hosted geo-specific Personally Identifiable Information (PII) data, and the solution had to be capable of decrypting this information at large volumes, with the system needing to return upwards of 90,000 records (at the time I handed it over).

This intricate web of varying data requirements, microservice dependencies, output formats, and security considerations presented a formidable challenge. The goal was to create an integration layer capable of not only managing these complexities but doing so with scalability to accommodate future growth, as the organization was rapidly expanding in new regions.

The Design

Configuration Approach

While the design evolved over time, at the core was the idea that a configuration class would drive the logic behind the framework. While I can't go into the specifics of the configuration, the configuration class looked something like this:

    public class Configuration
    {
        public string ProcessId { get; set; } // used as an explicit log correlation ID
        public string ProcessName { get; set; }

        // configurations to dictate if integration returns single vs many objects
        public int ExplicitObjectIdTypeA { get; set; }
        public int ExplicitObjectIdTypeZ { get; set; }

        // configurations to filter data from services
        public IEnumerable<string> FilterDataByTypeA { get; set; }
        public IEnumerable<string> FilterDataByTypeB { get; set; }

        // configurations to specify which data sets to include in a query
        // in other words, which Microservices to call
        public bool IncludeDataSetA { get; set; }
        public bool IncludeDataSetB { get; set; }

        // configuration for specific business logic processes
        public bool SpecialFeatureA { get; set; }
        public bool SpecialFeatureZ { get; set; }

        // configurations that modified programming
        public bool ModifiedExceptionHandling { get; set; }
    }

This enabled great flexibility and extensibility over the types of integrations that the system could handle. The integration layer then passed a subset of the configuration down to each Microservice it called in order to dictate how that Microservice would query or calculate data.

For example, one of the Microservices had a collection of data that contained the history of changes for a record, such as:

| LocationId | ParentObjectId | EffectiveDate |
|---|---|---|
| 1234 | 4321 | 2022-1-1 |
| 1234 | 8765 | 2021-12-1 |

In this example, the requirement was to output, for each property, the date on which its current value took effect. In the example above, the effective date for LocationId is 2021-12-1, because its value has been 1234 since that row, while the effective date for ParentObjectId is 2022-1-1, when it changed from 8765 to 4321. In reality the actual record had many properties, and not all integrations needed all of them. Therefore, in order to calculate only the properties that we needed (for performance reasons), I introduced a setting in the Configuration object that looked something like this:

    public class Configuration
    {
        public IEnumerable<string> CalculatedRecordChangeProperties { get; set; }
    }

Using this configuration, I was able to explicitly state which fields I wanted to calculate. In the example where I only wanted to calculate LocationId, I could simply set that in the configuration and the consuming Microservice would only execute for that property.
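As a rough illustration of the idea, the following sketch computes effective dates only for the properties named in the configuration. It is not the original implementation: the `LocationHistory` record, the `EffectiveDateCalculator` class, and the getter map are hypothetical names made up for this example, and the real service had many more properties.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical shape of one row in the change-history collection.
public record LocationHistory(int LocationId, int ParentObjectId, DateTime EffectiveDate);

public static class EffectiveDateCalculator
{
    // Hypothetical map from a property name to a getter for that property's value.
    private static readonly Dictionary<string, Func<LocationHistory, object>> Getters = new()
    {
        ["LocationId"] = r => r.LocationId,
        ["ParentObjectId"] = r => r.ParentObjectId,
    };

    // For each requested property, walk back from the newest row while the value is
    // unchanged; the oldest row in that run is when the current value took effect.
    public static Dictionary<string, DateTime> Calculate(
        IEnumerable<LocationHistory> history,
        IEnumerable<string> calculatedRecordChangeProperties)
    {
        var newestFirst = history.OrderByDescending(r => r.EffectiveDate).ToList();
        var result = new Dictionary<string, DateTime>();
        if (newestFirst.Count == 0) return result;

        foreach (var name in calculatedRecordChangeProperties)
        {
            var getter = Getters[name]; // throws KeyNotFoundException for unknown names
            var current = getter(newestFirst[0]);
            result[name] = newestFirst
                .TakeWhile(r => Equals(getter(r), current))
                .Last()
                .EffectiveDate;
        }
        return result;
    }
}
```

With the two rows from the table above and only "LocationId" requested, the result contains a single entry dated 2021-12-1, and no work is done for ParentObjectId.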

On the main integration layer, there is a service that orchestrates the process based on the configuration. It would only initialize and call a service if that service's data set was requested, or if the service was needed to connect data behind the scenes.

Integration Object and Mapping

During runtime, the data that the integration layer collected from the various services was kept in an object that contained definitions for all datasets in addition to some helper methods.

    public class IntegrationObject
    {
        // property names match their types so mappers can use genericObject.DataSetA
        public DataSetA DataSetA { get; set; }
        public DataSetZ<string> DataSetZ { get; set; }

        // helper methods for generic logic used across integrations
        public bool HelperMethodA()
        {
            // Property2 is a placeholder for a numeric property
            return this.DataSetA.Property2 > 1000;
        }

        // methods to override or format certain data
        public void OverrideData()
        {
            this.DataSetA.Property1 = "Explicit Override";
        }

        public string FormattedData(FormatDataType type)
        {
            var output = this.DataSetA.Property1;
            if (type == FormatDataType.TypeA)
            {
                // format the data and set output to this
            }
            return output;
        }
    }

Once the runtime completed fetching the data, specific integrations could map that object into the desired output model. Using a constructor-based approach, I was able to easily map the data in the following manner:

    public class SpecificIntegrationObject
    {
        public string PropertyA { get; set; }

        public SpecificIntegrationObject(IntegrationObject genericObject)
        {
            this.PropertyA = genericObject.DataSetA.Property1;

            // perform specific transformations that are needed for this integration
            // if the scope is greater than this integration (other integrations could use it)
            // then place that at a global level in the genericObject
        }
    }

Additionally, there is a helper service that contains generic functionality, such as methods to standardize logs, get authentication tokens, call expression validation services, persist job outputs for storage/review, and encrypt and serialize data, among other things. The service looked something like this:

    public class IntegrationHelper
    {
        // parameters and method bodies omitted for brevity
        public void SerializeToXml() {}
        public void WriteFlatFile() {}
        public void WriteStringFile() {}
        public void PGPEncrypt() {}
        public void UploadToFTPServer() {}
        public void PostJobResultToIntegrationLog() {}
        public void PostOutputFileToBlobStorageForLoggingPurposes() {}
        public string GetAdfsToken() => throw new System.NotImplementedException();
    }

This placed all of the generic functionality at a global level where different integrations could leverage it. The goal was to avoid re-writing encryption, serialization, or token-fetching logic for each integration.

Client Endpoint

The controller on the integration layer hosted a generic endpoint that allowed callers to specify certain parameters. Eventually, I was able to extend this approach with more request parameters, as I did for the Dry Run functionality described later. Initially, the request looked like this:

    public class IntegrationRequest
    {
        // the integration type determined whether the integration would run
        // synchronously (response is expected) vs asynchronously (execute job only)
        public string Type { get; set; }

        // the name was used as the unique identifier of the integration
        public string Name { get; set; }
    }

The Implementation

Implementing the solution proved challenging for different reasons, but probably the most challenging was the fact that I needed to pull, manipulate, and transform upwards of 90k records from tens of Microservices in a performant manner. Some of the challenges and solutions I found during this time were:

Batching Calls to Microservices

Due to different types of timeouts (HTTP, Kubernetes, etc.), I batched the calls so that the orchestrating integration layer could collect the data it needed in chunks. The infrastructure behind each Microservice varied and they did not all have the same compute resources, which meant that chunk sizes had to vary per service. While this sounds like a straightforward approach, there were some small nuances relating to the configuration that was passed down with the call for each chunk.
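A minimal sketch of the chunking idea, assuming .NET 6 or later for `Enumerable.Chunk`. The `BatchedFetcher` class and the `fetchChunkAsync` delegate are hypothetical stand-ins for the real HTTP calls, and the per-service chunk size is simply passed in by the caller.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

public static class BatchedFetcher
{
    // Splits the ids into chunks of at most chunkSize and calls the service once per chunk,
    // so that no single request runs long enough to hit a timeout.
    public static async Task<List<TResult>> FetchInChunksAsync<TId, TResult>(
        IEnumerable<TId> ids,
        int chunkSize,
        Func<IReadOnlyList<TId>, Task<IEnumerable<TResult>>> fetchChunkAsync)
    {
        if (chunkSize <= 0) throw new ArgumentOutOfRangeException(nameof(chunkSize));

        var results = new List<TResult>();
        foreach (var chunk in ids.Chunk(chunkSize))
        {
            // Chunks are requested sequentially here; a real system might run a few in parallel.
            results.AddRange(await fetchChunkAsync(chunk));
        }
        return results;
    }
}
```

Keeping the chunk size a parameter lets a service with fewer compute resources be called with smaller chunks than a better-provisioned one.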

Using Appropriate Data Types

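When dealing with substantial volumes of data, it's crucial to use suitable data structures. For instance, keeping records in a list when they are repeatedly looked up by key turns every lookup into a linear scan, whereas a dictionary gives near constant-time access. At tens of thousands of records, that difference could significantly undermine performance.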
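To make the point concrete, here is a small sketch of joining records from two services by key. The `Location` and `Assignment` records and the `DataJoiner` class are hypothetical names for illustration only.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical records returned by two different services.
public record Location(int LocationId, string Name);
public record Assignment(int RecordId, int LocationId);

public static class DataJoiner
{
    public static List<string> JoinLocationNames(
        IEnumerable<Assignment> assignments,
        IEnumerable<Location> locations)
    {
        // Build the index once. ToDictionary throws if two locations share an id.
        var locationsById = locations.ToDictionary(l => l.LocationId);

        var names = new List<string>();
        foreach (var assignment in assignments)
        {
            // Average O(1) per lookup, versus an O(n) scan with locations.First(...).
            if (locationsById.TryGetValue(assignment.LocationId, out var location))
            {
                names.Add(location.Name);
            }
        }
        return names;
    }
}
```

With n assignments and m locations, the dictionary version does roughly n + m work, while scanning a list for every assignment does roughly n × m.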

Manual Decryption of Elements

As I previously mentioned, some of the services contained encrypted PII data that needed to be decrypted. The decryption solution that the Microservices used was based on reflection, a C# feature that allows code to inspect its own types and members at runtime. While this was nice for developers, as it enabled auto-decryption of data, it was practically unusable for large-scale integrations because of its overhead at this volume. After diagnosing the issue, I opted for a more direct approach and explicitly decrypted each property. This did not damage the developer experience much, as I encapsulated the decryption logic in a reusable method.
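The explicit approach might look something like the sketch below. The `PersonRecord` class, its properties, and the `decrypt` delegate are all hypothetical; the actual decryption routine and record shapes are not described here.

```csharp
using System;

// Hypothetical record; only Name and NationalId are assumed to be encrypted.
public class PersonRecord
{
    public string Name { get; set; } = "";
    public string NationalId { get; set; } = "";
    public string Department { get; set; } = "";
}

public static class PiiDecryptor
{
    // Reusable helper: decrypts a single value and leaves null or empty values untouched.
    public static string DecryptValue(string cipherText, Func<string, string> decrypt) =>
        string.IsNullOrEmpty(cipherText) ? cipherText : decrypt(cipherText);

    // Explicitly decrypts only the known PII properties, with no runtime type inspection.
    public static void Decrypt(PersonRecord record, Func<string, string> decrypt)
    {
        record.Name = DecryptValue(record.Name, decrypt);
        record.NationalId = DecryptValue(record.NationalId, decrypt);
    }
}
```

The trade-off is that each new encrypted property has to be added by hand, but the per-record cost is just a couple of direct property accesses.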

Logging Approach

Integration projects are susceptible to breaking for different reasons, such as an upstream change in data or service timeouts. Because of this, logging is extremely important for successful integration projects. The organization was using Splunk for logging, which made it easy for me to be verbose with my logging approach. I found that adding a large amount of logs to the jobs made diagnosing production issues a lot easier. I relied on the correlation ID to explicitly correlate logs, which meant that I could simply query for a UUID and get all the logs for a run, and I added explicit time tracking to more easily diagnose slow performance. Lastly, I used a keyword-based approach that allowed me to easily monitor specific features that I would deploy.

Integration Dashboard

In addition to the aforementioned Splunk logs, the process itself would log its result and any associated data (stored as a blob) to a table that was consumed by a dashboard. The dashboard also allowed users to re-run integrations and to review the data, subject to a role-based access policy.

Dry Runs

Unfortunately, the real world is not ideal, and certain integrations could not be tested well in lower environments due to environment constraints. In other words, we would not know if an integration worked as expected until we tested it in production. That resulted in a catch-22, because we couldn't just run the integration in production and send possibly bad data to a downstream system. My solution for this problem was to enable dry runs: the ability to run the integration (data fetching and calculating, mapping, serializing, etc.) without actually delivering the output to another system. This was as simple as modifying the integration request object to include a flag for dry runs; if the flag was true, the delivery portion of the integration was disabled.

    public class IntegrationRequest
    {
        // same as before
        public string Type { get; set; }
        public string Name { get; set; }

        // new dry run flag
        public bool DryRun { get; set; }
    }
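How the flag gates delivery can be sketched as follows. The `IntegrationRunner` class and its two delegates are hypothetical placeholders for the real fetch/map/serialize pipeline and the real delivery step (FTP upload, HTTP call, and so on).

```csharp
using System;
using System.Threading.Tasks;

public class IntegrationRequest
{
    public string Type { get; set; } = "";
    public string Name { get; set; } = "";
    public bool DryRun { get; set; }
}

public static class IntegrationRunner
{
    // Always builds the output; only delivers it downstream when DryRun is false.
    public static async Task<string> RunAsync(
        IntegrationRequest request,
        Func<Task<string>> buildOutputAsync,
        Func<string, Task> deliverAsync)
    {
        var output = await buildOutputAsync(); // fetch, calculate, map, serialize

        if (!request.DryRun)
        {
            await deliverAsync(output);
        }

        // The output is returned either way so it can be logged and reviewed.
        return output;
    }
}
```

Because everything except delivery still runs, a dry run in production exercises the same code path and data as a real run.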

Feature Flags

Similar to dry-runs, I found another set of problems that would be more easily solved if there was a way to modify behavior in production. This was important because the organization was large and deploying to production required many processes. Some examples of the problems I ran into were:

- Changing an FTP upload certificate for a vendor in production, but needing the ability to roll-back to the current certificate if the new certificate failed.

- Needing to modify the behavior of an integration that would send delta updates to a downstream system to instead do a full sync.

- Other needs for behavior modification or needing to shut off downstream updates to a system in flight.

In order to circumvent this issue, I introduced a simple table that would hold a string that represented a feature flag. We could then work with our support team to have the table record updated in production and modify the behavior of the production integration. This feature proved extremely useful as it not only solved the problems we faced, but also enabled us to more rapidly iterate on the integrations by implementing roll-back functionality.
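As a hedged sketch of how such string flags could be consumed, assume the table rows have already been loaded into memory. The `FeatureFlags` and `CertificateSelector` classes, the flag name, and the certificate names are all hypothetical.

```csharp
using System;
using System.Collections.Generic;

public class FeatureFlags
{
    private readonly HashSet<string> _enabled;

    // flagsFromTable stands in for the strings read from the feature flag table.
    public FeatureFlags(IEnumerable<string> flagsFromTable)
    {
        _enabled = new HashSet<string>(flagsFromTable, StringComparer.OrdinalIgnoreCase);
    }

    public bool IsEnabled(string flag) => _enabled.Contains(flag);
}

public static class CertificateSelector
{
    // Uses the new certificate only while the flag is present; removing the
    // table record rolls back to the current certificate without a deployment.
    public static string Select(FeatureFlags flags) =>
        flags.IsEnabled("UseNewVendorCertificate") ? "new-certificate" : "current-certificate";
}
```

The same pattern covers the other cases above, for example a flag that switches a delta integration to a full sync, or one that pauses downstream delivery.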

Unlocking Dynamic Integrations

As our systems evolved and the organization expanded, the need for more and more integrations increased. Many internal teams needed data from our system and instead of writing more and more custom integrations, I realized we could fulfill a large number of these requests by creating dynamic HTTP integration endpoints - a feature I named Endpoints.

In order to accomplish this, I created a generic endpoint in the integration layer API and a database table that described the integration. The controller endpoint looked something like this:

    [HttpPost("endpoints/{integrationName}")]
    public async Task<IActionResult> EndpointIntegration(string integrationName, [FromBody]EndpointRequest request)
    {
        // outline only: the parameter name must match the {integrationName} route token to bind

        // get the configuration record (schema described below)
        // check if the endpoint is enabled, if disabled then return
        // call security service to determine if caller has appropriate permission, if not then return
        // serialize configuration object and pass it down to integration service
        // return response
    }

On the database table, the configuration was stored as a JSON object along with a uri, which resulted in an endpoint like:

https://service/api/endpoints/integration-uri

When a client called the endpoint, their security credentials would be checked to make sure that they possessed the role-based permission required for the integration. Lastly, I added an Enabled flag that allowed us to turn off integrations, should the need arise.

| Uri | Enabled | SecurityPermission | Configuration |
|---|---|---|---|
| integration-uri | true | IntegrationPermission1 | {"ProcessName":"IntegrationName","ExplicitObjectIdTypeA":1,"ExplicitObjectIdTypeZ":2,"FilterDataByTypeA":["TypeA1","TypeA2"],"FilterDataByTypeB":["TypeB1","TypeB2"],"IncludeDataSetA":true,"IncludeDataSetB":false,"SpecialFeatureA":true,"SpecialFeatureZ":false,"ModifiedExceptionHandling":true} |
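The checks described above can be sketched as a small resolver over one table row, using `System.Text.Json` to turn the stored JSON into a configuration object. The `EndpointRow` record and `EndpointResolver` class are hypothetical names, the caller's permissions are assumed to arrive as a plain set of strings, and only a few of the configuration properties are shown.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// Trimmed-down version of the configuration class, for illustration only.
public class Configuration
{
    public string ProcessName { get; set; } = "";
    public List<string> FilterDataByTypeA { get; set; } = new();
    public bool IncludeDataSetA { get; set; }
    public bool IncludeDataSetB { get; set; }
}

// Hypothetical shape of one row in the endpoint table.
public record EndpointRow(string Uri, bool Enabled, string SecurityPermission, string Configuration);

public static class EndpointResolver
{
    // Returns false when the endpoint is disabled or the caller lacks the permission;
    // otherwise deserializes the stored JSON into a Configuration.
    public static bool TryResolve(
        EndpointRow row,
        ISet<string> callerPermissions,
        out Configuration configuration)
    {
        configuration = new Configuration();

        if (!row.Enabled) return false;
        if (!callerPermissions.Contains(row.SecurityPermission)) return false;

        // Property names are matched case-sensitively by default.
        configuration = JsonSerializer.Deserialize<Configuration>(row.Configuration)
                        ?? new Configuration();
        return true;
    }
}
```

Because the configuration is data rather than code, onboarding a new consumer in this model comes down to inserting a row with the right JSON and permission.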

The End Result

This was a simplified version of the project; the reality was more complex than what can be covered in a single blog post. Navigating challenges such as the size of the organization, its fast-paced environment, and a large production release that required coordination with numerous teams was certainly demanding.

Despite these challenges, this remains one of my favorite projects. The framework I created is still in use today, powering a host of integrations for a multibillion-dollar enterprise. I take particular pride in the configuration-driven, modular coding approach. This approach allowed the framework to support a wide variety of integrations and facilitated rapid onboarding, which was clearly demonstrated when we were able to add database-driven integrations later on.

To conclude, this project underscores the value of forward-thinking and adaptability in the realm of software development, and has continued to influence my approach to problem-solving.